The latest version of the workshop (NeurIPS ENLSP 2024) is out; you can check it on the new website.

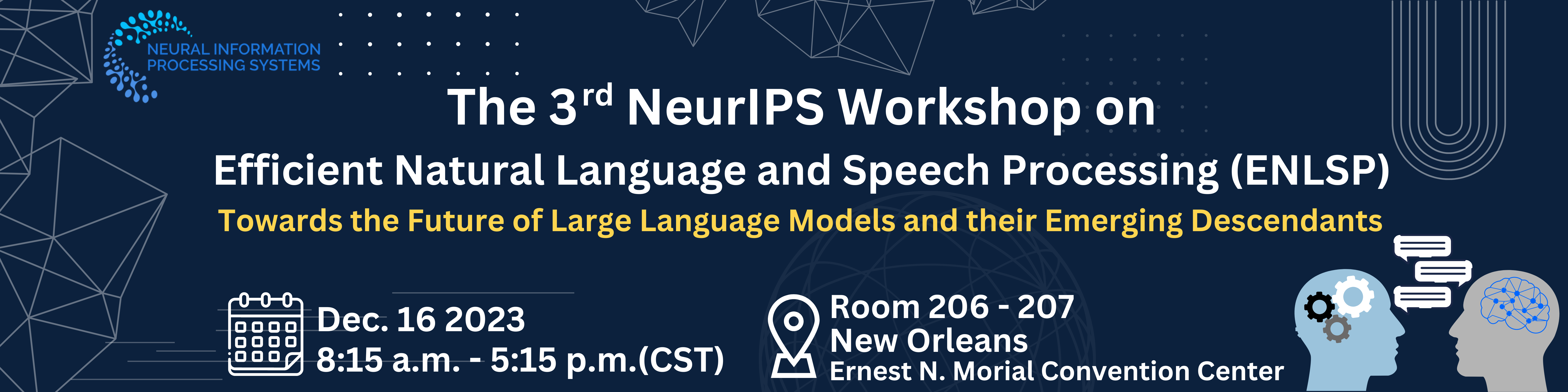

The third version of the Efficient Natural Language and Speech Processing (ENLSP-III) workshop will focus on the future of large language models and their emerging applications in different domains such as natural language, speech processing, and biological sequences. The goal is to make them more efficient in terms of Data, Model, Training, and Inference, for real-world applications as well as academic research. The workshop program offers an interactive platform for gathering experts and talents from academia and industry through invited talks, a panel discussion, paper submissions, reviews, interactive posters, oral presentations and a mentorship program.

This will be a unique opportunity to discuss and share challenging problems, build connections, exchange ideas and brainstorm solutions, and foster future collaborations. The topics of this workshop can be of interest to people working on general machine learning, deep learning, optimization, theory, and NLP & Speech applications.

Overview

With the emergence of large language and speech models (such as GPT-3, GPT-4, wav2vec, HuBERT, WavLM, Whisper, PaLM, LLaMA, and PaLM 2), their variants fine-tuned to follow instructions (such as InstructGPT, Alpaca, Dolly) and, especially, conversational language models (such as ChatGPT, Bard, Vicuna, StableLM-Tuned, OpenAssistant), we have taken one significant step towards machines mimicking human intelligence. The contributions of language models do not stop there: their emerging usage in biological (protein language models such as the ProtTrans models, ESM, ProtGPT2) and chemical domains (small molecule and polymer language models like SMILES-BERT, polyBERT, and TransPolymer) has further expanded their applications across various scientific disciplines. As a result, these models are revolutionizing the way we approach research and knowledge discovery, paving the way for more innovative breakthroughs in diverse fields.

This great success has come at the price of pre-training these large models on a huge amount of data, fine-tuning them with instruction-based data and human supervised fine-tuning data, and extensive engineering efforts. Despite this success, it is evident that most of these models are largely over-parameterized and their efficiency is in question. Lack of efficiency can largely limit the application of these advanced techniques in practice. Training, adapting or deploying these large models on devices or even cloud services with limited memory and computational power can be very challenging.

While these achievements have paved the way for faster progress in improving large foundation models in the future, we need to address their efficiency issues at the same time. For example, in natural language, it has been shown that larger models reveal more zero-shot or few-shot (in-context learning) capabilities in handling different tasks. However, collecting data, pre-training and maintaining such large models can be very expensive. In terms of training data, it is still not clear to what extent expanding the training data can improve these pre-trained models and whether we can compress pre-training data without sacrificing much performance. The problem of efficiency becomes more critical when we think about pre-training multimodal models. At the time of deployment, designing proper prompts for different tasks can be very arbitrary and time consuming. Fine-tuning large language models with billions of parameters is very costly. One can think of transferring the knowledge of large foundation models to smaller models (by distillation or symbolic distillation), but we still do not have a straightforward recipe for this task. Furthermore, there is a debate in the literature about the extent to which we can transfer the knowledge of powerful large black-box models such as ChatGPT to smaller models in specific or general domains. Additionally, due to the huge size of the foundation models, applying model compression techniques to them is not an easy task.

In the light of advances in large protein language models and their application in biology, this year there will be a special track focused on emerging protein language models, their pretraining, fine tuning, applications, and approaches for improving their efficiency.

Call for Papers

It is of vital importance to invest in the future of large foundation models by enhancing their efficiency in terms of data, modeling, training and inference from the different perspectives highlighted in the workshop. In this regard, we share some active research topics in this domain which might be of interest to the NeurIPS community, to encourage their participation, ideas and contributions. The scope of this workshop includes, but is not limited to, the following topics:

Efficient Pre-Training: How can we reduce the cost of pre-training new models?

- Accelerating the pre-training process

- Efficient initialization and hyper-parameter tuning (HPT)

- Data vs. scale of pre-trained models

- Efficient multimodal (e.g., text–speech) pre-trained models and the efficiency issues related to them

- New efficient architectures (e.g. using sparse structures or mixture of experts (MoEs)) or new training objectives for pre-trained models

Efficient Fine-tuning: Fine-tuning all the parameters of large pre-trained models on downstream tasks can be expensive and is prone to overfitting.

- Efficient prompt engineering and in-context learning

- Parameter-efficient tuning solutions (i.e. training only a portion of the entire network)

- Accelerating the fine-tuning process (e.g. by improving the optimizer or by layer-skipping)

Data Efficiency: Pre-training (with unlabeled data) and fine-tuning (with labeled data) are both data-hungry processes. Labeling data and incorporating human-annotated data or human feedback are very time consuming and costly. Here we would like to address "how to reduce the costs borne by data?"

- Sample efficient training, training with less data, few-shot and zero-shot learning

- How to reduce the requirements for human labeled data?

- Can we rely on machine generated data for training models? (e.g. data collected from ChatGPT)

- Data compression, data distillation

Efficient Deployment: How can we reduce the inference time or memory footprint of a trained model for a particular task?

- Relying on in-context learning and prompt engineering of large language models or fine-tuning smaller models (by knowledge transfer from larger models)?

- Neural model compression techniques such as (post-training) quantization, pruning, layer decomposition and knowledge distillation (KD) for NLP and Speech

- Impact of different efficient deployment solutions on the inductive biases learned by the original models (such as OOD generalization, in-context learning, in-domain performance, hallucination).

Special track: Protein Language Models. The emergence and future of language models for biological sequences, and how to make them more efficient.

- Protein language models and their applications

- Refining the pretraining algorithm and/or model architecture of LLMs to optimize performance in the protein domain.

- Optimizing the curriculum learning (order of pretraining data presentation) for more efficient pre-training or fine tuning of protein language models

- Efficient remote homology via dense retrieval using protein language models

- Combining sequence and 3D structure in pretraining or fine-tuning of the models

- Multi-modal language models for biological sequences.

Other Efficient Applications

- Knowledge localization, knowledge editing, or targeted editing/training of foundation models

- Efficient dense retrieval and search

- Efficient graphs for NLP

- Training models on device

- Incorporating external knowledge into pre-trained models

- Efficient federated learning for NLP: reducing communication costs, tackling heterogeneous data and heterogeneous models.

Submission Instructions

You are invited to submit your papers through our CMT submission portal (Link). All submitted papers must be anonymous for double-blind review. We expect each paper to be reviewed by at least three reviewers. The content of the paper (excluding the references and supplementary materials) should not be longer than 4 pages, strictly following the NeurIPS template style.

Authors can submit up to 100 MB of supplementary materials separately. Authors are highly encouraged to submit their code for reproducibility purposes. According to the guidelines of the NeurIPS workshops, already published papers are not encouraged for submission, but you are allowed to submit your arXiv papers or papers that are under submission. Moreover, a work that is presented at the main NeurIPS conference should not appear in a workshop. Please make sure to indicate the complete list of conflicts of interest for all the authors of your paper. To encourage higher quality submissions, our sponsors are offering the Best Paper and the Best Poster Award to qualified outstanding original oral and poster presentations (upon nomination of the reviewers). Also, we will give one outstanding paper certification for our special track on protein language models. Bear in mind that our workshop is not archival, but the accepted papers will be hosted on the workshop website.

Important Dates:

- Submission Deadline: October 2, 2023 AOE

- Acceptance Notification: October 27, 2023 AOE

- Camera-Ready Submission: November 3, 2023 AOE

- Workshop Date: December 16, 2023

Confirmed Keynote Speakers

Samy Bengio

Apple

Tatiana Likhomanenko

Apple

Luke Zettlemoyer

University of Washington & Meta AI

Tara Sainath

Google

Sarath Chandar

MILA / Polytechnique Montreal

Kenneth Heafield

University of Edinburgh

Ali Madani

Profluent

Panelists

Nazneen Rajani

Hugging Face

Minjia Zhang

Microsoft / DeepSpeed

Tim Dettmers

University of Washington

Schedule

Title: (Keynote Talk) Deploying efficient translation at every level of the stack

Presenter: Kenneth Heafield

Bio: Kenneth Heafield is the founder of Efficient Translation Limited and left the University of Edinburgh last week, where he was an Associate Professor. He trained a large language model on a trillion tokens in 2013 before it was cool. Then he ran EU projects ParaCrawl to gather data from the web, Bergamot to make fast translation for browsers, and HPLT to make language models large again.

Abstract: Practical efficient neural networks combine several optimizations ranging from assembly code to network structure. Yet most papers about optimization start with an unoptimized baseline, omitting comparison even with simple methods like using a smaller network. Shared tasks force a different mentality, where each idea has to prove its worth against a highly optimized baseline. This informs our work on fast and small machine translation with latency under 20 ms for an average sentence. The models are now deployed in Firefox.

Title: (Keynote Talk) Simple and efficient self-training approaches for speech recognition

Presenters: Samy Bengio, Tatiana Likhomanenko

Bio: Samy Bengio has been a senior director of machine learning research at Apple since 2021. Before that, he was a distinguished scientist at Google Research from 2007, where he headed part of the Google Brain team, and at IDIAP in the early 2000s, where he co-wrote the well-known open-source Torch machine learning library. His research interests span many areas of machine learning such as deep architectures, representation learning, vision and language processing and, more recently, reasoning. He is action editor of the Journal of Machine Learning Research and on the board of the NeurIPS foundation. He was on the editorial board of the Machine Learning Journal, has been program chair (2017) and general chair (2018) of NeurIPS, program chair of ICLR (2015, 2016), general chair of BayLearn (2012-2015), MLMI (2004-2006), as well as NNSP (2002), and on the program committee of several international conferences such as NeurIPS, ICML, ICLR, ECML and IJCAI. More details can be found at http://bengio.abracadoudou.com.

Tatiana is a research scientist on the machine learning research team at Apple. Prior to Apple, she was an AI resident and later a postdoctoral research scientist in the speech recognition team, Facebook AI Research. Back in the day, Tatiana received a Ph.D. in mixed type partial differential equations from Moscow State University. For 4 years she worked on applications of machine learning to high energy physics as a researcher in the joint lab at Yandex and CERN, and later at the startup NTechLab, a leader in face recognition. The main focus of her recent research is transformer training and generalization, efficient speech recognition with less supervision, and private federated learning.

Abstract: Self-training, or pseudo-labeling (PL), algorithms have recently emerged as a powerful strategy for semi-supervised learning in speech recognition in the era of transformers and large scale data. In this talk, we will walk you from the first successful pseudo-labeling algorithms based on teacher-student training, which alternates between training a model and generating pseudo-labels (PLs) with it, to continuous pseudo-labeling algorithms, where PLs are generated in an end-to-end manner as training proceeds, improving training speed and the accuracy of the final model. We will discuss different aspects of PL algorithms that make them simple and resource efficient, and overall what the key components of such huge success are: what exactly the model learns, how training dynamics change, how speaker diversity and amount of hours affect training, and how training depends on the language models. Finally, we will show how pseudo-labeling can be used to train a model on a source language with labeled data and to fine-tune it on a target language with only unlabeled data.

Title: (Spotlight 1) Query-Dependent Prompt Evaluation and Optimization with Offline Inverse RL

Presenter: Hao Sun

Authors: Hao Sun · Alihan Hüyük · Mihaela van der Schaar

Abstract: In this study, we aim to enhance the arithmetic reasoning ability of Large Language Models (LLMs) through zero-shot prompt optimization. We identify a previously overlooked objective of query dependency in such optimization and elucidate two ensuing challenges that impede the successful and economical design of prompt optimization techniques. We introduce Prompt-OIRL, which harnesses offline inverse reinforcement learning to draw insights from offline prompting demonstration data. Such data exists as by-products when diverse prompts are benchmarked on open-accessible datasets. With Prompt-OIRL, the query-dependent prompt optimization objective is achieved by first learning an offline reward model. This model can evaluate any query-prompt pairs without accessing LLMs. Subsequently, a best-of-N strategy is deployed to recommend the optimal prompt. Our experimental evaluations across various LLM scales and arithmetic reasoning datasets underscore both the efficacy and economic viability of the proposed approach.

Title: (Spotlight 2) MatFormer: Nested Transformer for Elastic Inference

Presenter: Fnu Devvrit

Authors: Fnu Devvrit · Sneha Kudugunta · Aditya Kusupati · Tim Dettmers · Kaifeng Chen · Inderjit Dhillon · Yulia Tsvetkov · Hanna Hajishirzi · Sham Kakade · Ali Farhadi · Prateek Jain

Abstract: Transformer models are deployed in a wide range of settings, from multi-accelerator clusters to standalone mobile phones. The diverse inference constraints in these scenarios necessitate practitioners to train foundation models such as PaLM 2 & Llama as a series of models of varying sizes. Due to significant training costs, only a select few model sizes are trained and supported, limiting more fine-grained control over relevant tradeoffs (latency, cost, accuracy). We introduce MatFormer, a nested Transformer architecture designed to offer elasticity in a variety of deployment constraints. Each Feed Forward Network (FFN) block of a MatFormer model is jointly optimized with a few nested smaller FFN blocks. This allows for the Mix'n'Match of model granularities across layers, i.e., a trained universal MatFormer model enables extraction of hundreds of accurate smaller models which were never explicitly optimized. We empirically demonstrate MatFormer's effectiveness for decoder-only language modeling and find that a 2.6B decoder-only MatFormer language model (MatLM) allows us to extract smaller models spanning from 1.5B to 2.6B, each exhibiting comparable validation loss and one-shot downstream evaluations to their independently trained counterparts. Finally, we showcase that speculative decoding with the accurate and consistent submodels extracted from MatFormer can further reduce inference latency.

Title: (Spotlight 3) Decoding Data Quality via Synthetic Corruptions: Embedding-guided Pruning of Code Data

Presenter: Yu Yang

Authors: Yu Yang · Aaditya Singh · Mostafa Elhoushi · Anas Mahmoud · Kushal Tirumala · Fabian Gloeckle · Baptiste Roziere · Carole-Jean Wu · Ari Morcos · Newsha Ardalani

Abstract: Code datasets, often collected from diverse and uncontrolled sources such as GitHub, potentially suffer from quality issues, thereby affecting the performance and training efficiency of Large Language Models (LLMs) optimized for code generation. Previous studies demonstrated the benefit of using embedding spaces for data pruning, but they mainly focused on duplicate removal or increasing variety, and in other modalities, such as images. Our work focuses on using embeddings to identify and remove "low-quality" code data. First, we explore features of "low-quality" code in embedding space, through the use of synthetic corruptions. Armed with this knowledge, we devise novel pruning metrics that operate in embedding space to identify and remove low-quality entries in the Stack dataset. We demonstrate the benefits of this synthetic corruption informed pruning (SCIP) approach on the well-established HumanEval and MBPP benchmarks, outperforming existing embedding-based methods. Importantly, we achieve up to a 3% performance improvement over no pruning, thereby showing the promise of insights from synthetic corruptions for data pruning.

Title: (Spotlight 4) FlashFFTConv: Efficient Convolutions for Long Sequences with Tensor Cores

Presenter: Dan Fu

Authors: Dan Fu · Hermann Kumbong · Eric Nguyen · Christopher Ré

Abstract: Convolution models with long filters have demonstrated state-of-the-art reasoning abilities in many long-sequence tasks but lag behind the most optimized Transformers in wall-clock time. A major bottleneck is the Fast Fourier Transform (FFT), which allows long convolutions to run in $O(N \log N)$ time in sequence length $N$ but has poor hardware utilization. In this paper, we study how to optimize the FFT convolution. We find two key bottlenecks: the FFT does not effectively use specialized matrix multiply units, and it incurs expensive I/O between layers of the memory hierarchy. In response, we propose FlashFFTConv. FlashFFTConv uses a matrix decomposition that computes the FFT using matrix multiply units and enables kernel fusion for long sequences, reducing I/O. FlashFFTConv speeds up exact FFT convolutions by up to 6.54$\times$ over PyTorch and achieves up to 4.4$\times$ speedup end-to-end. Given the same compute budget, FlashFFTConv allows Hyena-GPT-s to achieve 2.3 points better perplexity and M2-BERT-base to achieve 3.3 points higher GLUE score, matching models with twice the parameter count.
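As a rough illustration of the FFT convolution this abstract refers to, the sketch below compares a direct $O(N^2)$ convolution with an FFT-based one in pure Python. It is not FlashFFTConv: the function names (`fft`, `fft_conv`, `direct_conv`) are hypothetical, the radix-2 recursion is a textbook stand-in, and none of the matrix-multiply-unit or kernel-fusion optimizations described in the paper are modeled.

```python
import cmath


def fft(x, inverse=False):
    """Recursive radix-2 FFT; len(x) must be a power of two.

    With inverse=True the result is unnormalized (caller divides by len(x)).
    """
    n = len(x)
    if n == 1:
        return list(x)
    even = fft(x[0::2], inverse)
    odd = fft(x[1::2], inverse)
    sign = 1 if inverse else -1
    out = [0j] * n
    for k in range(n // 2):
        twiddle = cmath.exp(sign * 2j * cmath.pi * k / n) * odd[k]
        out[k] = even[k] + twiddle
        out[k + n // 2] = even[k] - twiddle
    return out


def fft_conv(u, k):
    """Linear convolution via pointwise multiplication in the frequency domain."""
    out_len = len(u) + len(k) - 1
    size = 1
    while size < out_len:  # zero-pad to a power of two to avoid wrap-around
        size *= 2
    fu = fft([complex(v) for v in u] + [0j] * (size - len(u)))
    fk = fft([complex(v) for v in k] + [0j] * (size - len(k)))
    y = fft([a * b for a, b in zip(fu, fk)], inverse=True)
    return [v.real / size for v in y[:out_len]]


def direct_conv(u, k):
    """Reference O(len(u) * len(k)) convolution."""
    out = [0.0] * (len(u) + len(k) - 1)
    for i, a in enumerate(u):
        for j, b in enumerate(k):
            out[i + j] += a * b
    return out
```

Both functions should agree up to floating-point rounding on any real-valued inputs; the FFT route only pays off for long sequences and long filters, which is the regime the abstract targets.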

Title: (Spotlight 5) Ensemble of low-rank adapters for large language model fine-tuning

Presenter: Xi Wang

Authors: Xi Wang · Laurence Aitchison · Maja Rudolph

Abstract: Finetuned LLMs often exhibit poor uncertainty quantification, manifesting as overconfidence, poor calibration, and unreliable prediction results on test data or out-of-distribution samples. One approach commonly used in vision for alleviating this issue is a deep ensemble, which constructs an ensemble by training the same model multiple times using different random initializations. However, there is a huge challenge to ensembling LLMs: the most effective LLMs are very, very large. Keeping a single LLM in memory is already challenging enough: keeping an ensemble of e.g. 5 LLMs in memory is impossible in many settings. To address these issues, we propose an ensemble approach using Low-Rank Adapters (LoRA), a parameter-efficient fine-tuning technique. Critically, these low-rank adapters represent a very small number of parameters, orders of magnitude less than the underlying pre-trained model. Thus, it is possible to construct large ensembles of LoRA adapters with almost the same computational overhead as using the original model. We find that LoRA ensembles, applied on their own or on top of pre-existing regularization techniques, give consistent improvements in predictive accuracy and uncertainty quantification.
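The idea of sharing one frozen base weight across several low-rank adapters and averaging their predictions can be sketched as follows. This is a toy, single-layer illustration under stated assumptions, not the authors' implementation: the helper names (`lora_logits`, `ensemble_predict`), the single linear layer standing in for an LLM, and the plain averaging of softmax outputs are all hypothetical choices, and the LoRA scaling factor is omitted.

```python
import math


def matvec(m, v):
    """Multiply matrix m (list of rows) by vector v."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def softmax(z):
    top = max(z)
    exps = [math.exp(t - top) for t in z]
    total = sum(exps)
    return [e / total for e in exps]


def lora_logits(w, a, b, x):
    """Base output W x plus the low-rank update B (A x).

    W is d_out x d_in and stays frozen; A is r x d_in and B is d_out x r,
    so each adapter holds only r * (d_in + d_out) parameters.
    """
    base = matvec(w, x)
    delta = matvec(b, matvec(a, x))
    return [p + q for p, q in zip(base, delta)]


def ensemble_predict(w, adapters, x):
    """Average class probabilities over adapters that share the same base W."""
    probs = [softmax(lora_logits(w, a, b, x)) for a, b in adapters]
    n = len(probs)
    return [sum(p[i] for p in probs) / n for i in range(len(probs[0]))]
```

Only the small `(A, B)` pairs differ between ensemble members, which is why the memory cost stays close to that of a single model in this picture.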

Title: (Keynote Talk) Branch-Train-Merge: Embarrassingly Parallel Training of Expert Language Models

Presenter: Luke Zettlemoyer

Bio: Luke Zettlemoyer is a Professor in the Paul G. Allen School of Computer Science & Engineering at the University of Washington, and a Research Director at Meta. His research focuses on empirical methods for natural language semantics, and involves designing machine learning algorithms, introducing new tasks and datasets, and, most recently, studying how to best develop self-supervision signals for pre-training. His honors include being named an ACL Fellow as well as winning a PECASE award, an Allen Distinguished Investigator award, and multiple best paper awards. Luke received his PhD from MIT and was a postdoc at the University of Edinburgh.

Abstract: Existing language model (LM) training regimes entangle compute, data, and parameters, requiring expensive synchronous communication with massive supercomputers. This talk introduces a new algorithm called Branch-Train-Merge (BTM) that asynchronously trains LMs that are fundamentally modular. In BTM, components (or experts) of the LM are specialized to distinct domains in the training corpus, and experts are conditionally updated based on the domain of the incoming document. We show how BTM enables LMs that are rapidly customizable (with the ability to mix, add, or remove experts after training), embarrassingly parallel (requiring no communication between experts), and sparse (needing only a few experts active at a time for inference). Key to our proposal is exploring what constitutes the domains to which experts specialize, as well as reflecting on the data sources used to train LMs. Our new techniques chart a path towards collaborative and iterative LM development, where anyone can contribute and maintain experts at modest computational cost.

Title: (Keynote Talk) Knowledge Consolidation and Utilization (In)Ability of Large Language Models

Presenter: Sarath Chandar

Bio: Sarath Chandar is an Assistant Professor at Polytechnique Montreal where he leads the Chandar Research Lab. He is also a core faculty member at Mila, the Quebec AI Institute. Sarath holds a Canada CIFAR AI Chair and the Canada Research Chair in Lifelong Machine Learning. His research interests include lifelong learning, deep learning, optimization, reinforcement learning, and natural language processing. To promote research in lifelong learning, Sarath created the Conference on Lifelong Learning Agents (CoLLAs) in 2022 and served as a program chair for 2022 and 2023. He received his PhD from the University of Montreal and MS by research from the Indian Institute of Technology Madras. For more details, please visit http://sarathchandar.in/.

Abstract: Large language models (LLMs) are becoming increasingly used in various downstream applications not only in natural language processing but also in various other domains including computer vision, reinforcement learning, and scientific discovery to name a few. This talk will focus on some of the fundamental limitations of using LLMs as task solvers. In the first half of the talk, I will show that LLMs cannot consolidate the knowledge that is spread across training documents. In the second half, I will show that while LLMs can acquire simple facts from the training data, they cannot utilize all the acquired facts while solving a new task and this utilization gap gets worse when the task distribution is very different from the training data distribution. I will also show that scaling will not solve both of these issues and argue for better pre-training procedures.

Title: (Spotlight 6) LoDA: Low-Dimensional Adaptation of Large Language Models

Presenter: Jing Liu

Authors: Jing Liu · Toshiaki Koike-Akino · Perry Wang · Matthew Brand · Ye Wang · Kieran Parsons

Abstract: Parameter-Efficient Fine-Tuning (PEFT) has recently garnered significant attention, due to the enormous size of Large Language Models (LLM). Among various PEFT methods, Low-Rank Adaptation (LoRA) demonstrates comparable performance to full fine-tuning, despite having significantly fewer trainable parameters. In this work, we first generalize LoRA from a low-rank linear adaptation/mapping to low-dimensional, non-linear adaptation/mapping, called Low-Dimensional Adaptation (LoDA). We further propose LoDA+, which further improves the expressiveness of the non-linear adaptation and still uses almost the same number of tunable parameters as LoRA. Both LoDA and LoDA+ include LoRA as a special case. To improve computational efficiency at inference, we further propose R-LoDA(+) and S-LoDA(+), replacing the pre-trained weight matrix by its low-rank or sparse approximation, which is frozen during fine-tuning. Empirical evaluations on Natural Language Generation tasks show that LoDA(+) and some variants outperform LoRA as well as other baselines. We will release a package that facilitates the integration of LoDA(+) and their variants with PyTorch models.

Title: (Spotlight 7) MultiPrompter: Cooperative Prompt Optimization with Multi-Agent Reinforcement Learning

Presenter: Dong-Ki Kim

Authors: Dong-Ki Kim · Sungryull Sohn · Lajanugen Logeswaran · Dongsub Shim · Honglak Lee

Abstract: Recently, there has been an increasing interest in automated prompt optimization based on reinforcement learning (RL). This approach offers important advantages, such as generating interpretable prompts and being compatible with black-box foundation models. However, the substantial prompt space size poses challenges for RL-based methods, often leading to suboptimal policy convergence. This paper introduces MultiPrompter, a new framework that views prompt optimization as a cooperative game between prompters who take turns composing a prompt together. Our cooperative prompt optimization effectively reduces the problem size and helps prompters learn optimal prompts. We test our method on the text-to-image task and demonstrate its ability to generate higher-quality images than baselines.

Title: (Spotlight 8) LoftQ: LoRA-Fine-Tuning-Aware Quantization for Large Language Models

Presenter: Yixiao Li

Authors: Yixiao Li · Yifan Yu · Chen Liang · Nikos Karampatziakis · Pengcheng He · Weizhu Chen · Tuo Zhao

Abstract: Quantization is an indispensable technique for serving Large Language Models (LLMs) and has recently found its way into LoRA fine-tuning (Dettmers et al., 2023). In this work we focus on the scenario where quantization and LoRA fine-tuning are applied together on a pre-trained model. In such cases it is common to observe a consistent gap in the performance on downstream tasks between full fine-tuning and quantization plus LoRA fine-tuning approach. In response, we propose LoftQ (LoRA-Fine-Tuning-aware Quantization), a novel quantization framework that simultaneously quantizes an LLM and finds a proper low-rank initialization for LoRA fine-tuning. Such an initialization alleviates the discrepancy between the quantized and full-precision model and significantly improves the generalization in downstream tasks. We evaluate our method on natural language understanding, question answering, summarization, and natural language generation tasks. Experiments show that our method is highly effective and outperforms existing quantization methods, especially in the challenging 2-bit and 2/4-bit mixed precision regimes. We will release our code.

Title: (Spotlight 9) Improving Linear Attention via Softmax Mimicry

Presenter: Michael Zhang

Authors: Michael Zhang · Kush Bhatia · Hermann Kumbong · Christopher Ré

Abstract: Linear attentions are promising methods to improve Transformer efficiency. This improved efficiency is applicable to training linear Transformers from scratch, converting finetuned Transformers into linear versions that recover task-specific performance, and converting pretrained Transformers into linear versions for downstream transfer. However, linear attentions often lag behind softmax attention in performance. To address this gap, we identify two key empirical properties of softmax attention missing in linear attentions: low-entropy "spiky" weights and dot-product monotonicity. We thus introduce Hedgehog, a learnable linear attention trained to "mimic" softmax attention by minimizing cross-entropy between attention weights. Experiments show Hedgehog significantly closes the attention performance gap. Hedgehog closes 68.6% of the gap on WikiText-103 when training 125M-parameter linear Transformers from scratch, improving upon prior linear attentions by up to 6 perplexity points (PPL), and recovers >99% of GLUE points when converting finetuned BERT models, outperforming prior methods up to 8.7 points. By "linearizing" GPT-2, Hedgehog outperforms efficient Transformer alternatives, obtaining state-of-the-art 16.7 perplexity on WikiText-103.
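For readers unfamiliar with linear attention in general, the sketch below shows why it is cheaper: once similarity is written as a dot product of feature maps, the sum over keys can be computed once and reused for every query. This illustrates generic (non-causal) linear attention only, not Hedgehog; the elementwise-exponential feature map `phi` is a hypothetical placeholder for the learned map described in the abstract, and all function names are assumptions.

```python
import math


def phi(v):
    """Hypothetical positive feature map (elementwise exp), not the learned one."""
    return [math.exp(x) for x in v]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def quadratic_attention(q_rows, k_rows, v_rows):
    """Builds every query-key weight explicitly: O(n^2) in sequence length."""
    out = []
    for q in q_rows:
        fq = phi(q)
        weights = [dot(fq, phi(k)) for k in k_rows]
        norm = sum(weights)
        out.append([
            sum(w * v[j] for w, v in zip(weights, v_rows)) / norm
            for j in range(len(v_rows[0]))
        ])
    return out


def linear_attention(q_rows, k_rows, v_rows):
    """Same result, but keys and values are summarized once: O(n) in length."""
    d, dv = len(k_rows[0]), len(v_rows[0])
    kv = [[0.0] * dv for _ in range(d)]  # sum over keys of phi(k) v^T
    ksum = [0.0] * d                     # sum over keys of phi(k)
    for k, v in zip(k_rows, v_rows):
        fk = phi(k)
        for i in range(d):
            ksum[i] += fk[i]
            for j in range(dv):
                kv[i][j] += fk[i] * v[j]
    out = []
    for q in q_rows:
        fq = phi(q)
        norm = dot(fq, ksum)
        out.append([sum(fq[i] * kv[i][j] for i in range(d)) / norm
                    for j in range(dv)])
    return out
```

The two functions compute the same quantity by associativity of matrix multiplication; only the order of operations, and therefore the cost in sequence length, differs.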

Title: (Spotlight 10) PaSS: Parallel Speculative Sampling

Presenter: Giovanni Monea

Authors: Giovanni Monea · Armand Joulin · Edouard Grave

Abstract: Scaling the size of language models to tens of billions of parameters has led to impressive performance on a wide range of tasks. At generation, these models are used auto-regressively, requiring a forward pass for each generated token, and thus reading the full set of parameters from memory. This memory access forms the primary bottleneck for generation and it worsens as the model size increases. Moreover, executing a forward pass for multiple tokens in parallel often takes nearly the same time as it does for just one token. These two observations lead to the development of speculative sampling, where a second smaller model is used to draft a few tokens, that are then validated or rejected using a single forward pass of the large model. Unfortunately, this method requires two models that share the same tokenizer and thus limits its adoption. As an alternative, we propose to use parallel decoding as a way to draft multiple tokens from a single model with no computational cost, nor the need for a second model. Our approach only requires an additional input token that marks the words that will be generated simultaneously. We show promising performance (up to $30\%$ speed-up) while requiring only as few as $O(d_{emb})$ additional parameters.
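The two-model draft-and-verify loop that this abstract describes as background can be sketched with toy deterministic "models". This is a simplified greedy variant for illustration, not PaSS and not the sampling-based acceptance rule: `target_next` and `draft_next` are hypothetical stand-ins for the large and small models, and the verification loop stands in for what would be one batched forward pass of the large model.

```python
def target_next(prefix):
    """Toy 'large' model: deterministic next token from the prefix."""
    return (sum(prefix) * 7 + len(prefix)) % 5


def draft_next(prefix):
    """Toy 'small' model: agrees with the target except at some positions."""
    t = target_next(prefix)
    return t if len(prefix) % 3 else (t + 1) % 5


def greedy_decode(prefix, n):
    """Baseline: one target call per generated token."""
    out = list(prefix)
    for _ in range(n):
        out.append(target_next(out))
    return out


def speculative_decode(prefix, n, k=4):
    """Draft k tokens, keep the longest prefix the target agrees with."""
    out = list(prefix)
    goal = len(prefix) + n
    rounds = 0  # each round models one batched pass of the large model
    while len(out) < goal:
        draft = []
        for _ in range(k):
            draft.append(draft_next(out + draft))
        rounds += 1
        accepted = []
        for tok in draft:
            expected = target_next(out + accepted)
            if tok == expected:
                accepted.append(tok)
            else:
                accepted.append(expected)  # replace the first mismatch and stop
                break
        else:
            # every drafted token matched, so the target's next token is free
            accepted.append(target_next(out + accepted))
        out.extend(accepted)
    return out[:goal], rounds
```

Because every kept token is the one the target model would have produced, the output matches plain greedy decoding, while the number of verification rounds can be smaller than the number of generated tokens when the draft model agrees often.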

Title: (

KeyNote Talk) LLMs for Protein Design:A Research Journey

Presenter: Ali Madani

BioAli Madani is the founder of Profluent Bio, an AI startup in protein design. Previously, he received his PhD from UC Berkeley and led machine learning research initiatives at Salesforce Research utilizing deep learning, language modeling, and computer vision. Most notably, Ali was the architect of the ProGen moonshot which demonstrated the first use of large language models for functional protein design.

Abstract: TBD

Title: (KeyNote Talk) End-to-End Speech Recognition: The Journey from Research to Production

Presenter: Tara Sainath

Bio: Tara Sainath received her S.B., M.Eng and PhD in Electrical Engineering and Computer Science (EECS) from MIT. After her PhD, she spent 5 years at the Speech and Language Algorithms group at IBM T.J. Watson Research Center, before joining Google Research. She served as a Program Chair for ICLR in 2017 and 2018. She has also co-organized numerous special sessions and workshops, including Interspeech 2010, ICML 2013, Interspeech 2016, ICML 2017, Interspeech 2019, and NeurIPS 2020. In addition, she has served as a member of the IEEE Speech and Language Processing Technical Committee (SLTC) as well as an Associate Editor for IEEE/ACM Transactions on Audio, Speech, and Language Processing. She is an IEEE and ISCA Fellow and the recipient of the 2021 IEEE SPS Industrial Innovation Award. She is currently a Principal Research Scientist at Google, working on applications of deep neural networks for automatic speech recognition.

Abstract: End-to-end (E2E) speech recognition has become a popular research paradigm in recent years, allowing the modular components of a conventional speech recognition system (acoustic model, pronunciation model, language model) to be replaced by one neural network. In this talk, we will discuss a multi-year research journey of E2E modeling for speech recognition at Google. This journey has resulted in E2E models that can surpass the performance of conventional models across many different quality and latency metrics, as well as the productionization of E2E models for Pixel 4, 5 and 6 phones. We will also touch upon future research efforts with E2E models, including multi-lingual speech recognition.

| Time | Title | Presenter |
| --- | --- | --- |
| 8:15AM - 8:20AM | Breakfast | |
| 8:15AM - 8:20AM | Opening Speech | |
| 8:20AM - 8:45AM | (KeyNote Talk) Deploying efficient translation at every level of the stack | Kenneth Heafield |
| 8:45AM - 9:30AM | (KeyNote Talk) Simple and efficient self-training approaches for speech recognition | Samy Bengio, Tatiana Likhomanenko |
| 9:30AM - 9:36AM | (Spotlight 1) Query-Dependent Prompt Evaluation and Optimization with Offline Inverse RL | Hao Sun |
| 9:36AM - 9:42AM | (Spotlight 2) MatFormer: Nested Transformer for Elastic Inference | Fnu Devvrit |
| 9:42AM - 9:48AM | (Spotlight 3) Decoding Data Quality via Synthetic Corruptions: Embedding-guided Pruning of Code Data | Yu Yang |
| 9:48AM - 9:54AM | (Spotlight 4) FlashFFTConv: Efficient Convolutions for Long Sequences with Tensor Cores | Dan Fu |
| 9:54AM - 10:00AM | (Spotlight 5) Ensemble of low-rank adapters for large language model fine-tuning | Xi Wang |
| 10:00AM - 10:30AM | Morning Break and Poster Setup | |
| 10:30AM - 11:00AM | (KeyNote Talk) Branch-Train-Merge: Embarrassingly Parallel Training of Expert Language Models | Luke Zettlemoyer |
| 11:00AM - 11:30AM | (KeyNote Talk) Knowledge Consolidation and Utilization (In)Ability of Large Language Models | Sarath Chandar |
| 11:30AM - 11:36AM | (Spotlight 6) LoDA: Low-Dimensional Adaptation of Large Language Models | Jing Liu |
| 11:36AM - 11:42AM | (Spotlight 7) MultiPrompter: Cooperative Prompt Optimization with Multi-Agent Reinforcement Learning | Dong-Ki Kim |
| 11:42AM - 11:48AM | (Spotlight 8) LoftQ: LoRA-Fine-Tuning-Aware Quantization for Large Language Models | Yixiao Li |
| 11:48AM - 11:54AM | (Spotlight 9) Improving Linear Attention via Softmax Mimicry | Michael Zhang |
| 11:54AM - 12:00PM | (Spotlight 10) PaSS: Parallel Speculative Sampling | Giovanni Monea |
| 12:00PM - 1:00PM | Lunch Break | |
| 1:00PM - 2:00PM | Poster Session I (Paper IDs: #1-45) | |
| 2:00PM - 2:30PM | (KeyNote Talk) LLMs for Protein Design: A Research Journey | Ali Madani |
| 2:30PM - 3:00PM | (KeyNote Talk) End-to-End Speech Recognition: The Journey from Research to Production | Tara Sainath |
| 3:00PM - 3:20PM | Afternoon Break and Poster Setup II | |
| 3:20PM - 4:10PM | Interactive Panel Discussion | Nazneen Rajani, Minjia Zhang, Tim Dettmers |
| 4:10PM - 4:15PM | Best Paper and Poster Awards | |
| 4:15PM - 5:15PM | Poster Session II (Paper IDs: #46-96) | |

Organizers

Mehdi Rezagholizadeh

Huawei Noah's Ark Lab

Yue Dong

University of California, Riverside

Lili Mou

University of Alberta

Qun Liu

Huawei Noah's Ark Lab

Boxing Chen

Huawei Noah's Ark Lab

Volunteers

Khalil Bibi

Huawei Noah's Ark Lab

Technical Committee

- Dan Alistarh (Institute of Science and Technology Austria)

- Bang Liu (University of Montreal (UdeM))

- Hassan Sajjad (Dalhousie University)

- Tiago Falk (INRS University)

- Yu Cheng (Microsoft)

- Anderson R. Avila (INRS University)

- Peyman Passban (BenchSci)

- Rasoul Kaljahi (Oracle)

- Joao Monteiro (ServiceNow)

- Shahab Jalalvand (Interactions)

- Ivan Kobyzev (Huawei Noah's Ark Lab)

- Aref Jafari (University of Waterloo)

- Jad Kabbara (MIT)

- Ahmad Rashid (University of Waterloo)

- Ehsan Kamalloo (University of Alberta)

- Abbas Ghaddar (Huawei Noah's Ark Lab)

- Marzieh Tahaei (Huawei Noah's Ark Lab)

- Soheila Samiee (BASF)

- Hamidreza Mahyar (McMaster University)

- Flávio Ávila (Verisk Analytics)

- Peng Lu (UdeM)

- Xiaoguang Li (Huawei Noah's Ark Lab)

- Mauajama Firdaus (University of Alberta)

- David Alfonso Hermelo (Huawei Noah's Ark Lab)

- Ankur Agarwal (Huawei Noah's Ark Lab)

- Khalil Bibi (Huawei Noah's Ark Lab)

- Tanya Roosta (Amazon)

- Tianyu Jiang (University of Utah, US)

- Juncheng Yin (Western University, Canada)

- Jingjing Li (Alibaba)

- Meng Cao (McGill and Mila, Canada)

- Wen Xiao (UBC, Canada)

- Chenyang Huang (University of Alberta)

- Sunyam Bagga (Huawei Noah's Ark Lab)

- Mojtaba Valipour (University of Waterloo)

- Lili Mou (University of Alberta)

- Yue Dong (University of California, Riverside)

- Makesh Sreedhar (NVIDIA)

- Vahid Partovi Nia (Huawei Noah's Ark Lab)

- Hossein Rajabzadeh (University of Waterloo)

- Mohammadreza Tayaranian (McGill University)

- Suyuchen Wang (MILA)

- Alireza Ghaffari (Huawei Noah's Ark Lab)

- Mohammad Dehghan (Huawei Noah's Ark Lab)

- Crystina Zhang (University of Waterloo)

- Parsa Kavehzadeh (York University)

- Ali Edalati (Huawei Noah's Ark Lab)

- Nandan Thakur (University of Waterloo)

- Heitor Guimarães (INRS University)

- Amirhossein Kazemnejad (McGill University/MILA)

- Hamidreza Saghir (Microsoft)

- Yuqiao Wen (University of Alberta)

- Ning Shi (University of Alberta)

- Peng Zhang (Tianjin University)

- Arthur Pimentel (INRS University)

Diamond Sponsors

Platinum Sponsor

Gold Sponsor